This article explains the causes of, and fixes for, the "Error completing request" error in Stable Diffusion Web UI.

Causes of the "Error completing request" error

The most common cause of the "Error completing request" error is running out of memory.

Raising the image size or the number of sampling steps increases memory usage accordingly.
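To show why image size matters so much, here is a rough back-of-the-envelope sketch. It assumes the SD 1.x VAE decoder (base channels 128, channel multipliers 1, 2, 4, 4), in which the last upsampling convolution outputs a 256-channel feature map at full image resolution, and it assumes fp32 values (4 bytes each). Neither assumption comes from this article, and the function name is hypothetical. Under those assumptions that one tensor grows with width × height, so doubling both sides quadruples it.

```python
def decoder_activation_gib(width, height, channels=256, bytes_per_value=4):
    """Hypothetical estimate of one full-resolution feature map in the VAE decoder.

    Assumes the SD 1.x VAE layout (last upsampling conv outputs 256 channels at
    full resolution) and fp32 values; these are assumptions, not measured facts.
    """
    return channels * width * height * bytes_per_value / 1024**3  # bytes -> GiB


for w, h in [(512, 512), (1024, 512), (2048, 1024)]:
    print(f"{w}x{h}: {decoder_activation_gib(w, h):.2f} GiB")
```

Under these assumptions 2048×1024 comes to exactly 2.00 GiB, which matches "Tried to allocate 2.00 GB" in the log below, where the Arguments line appears to show a 1024×512 image upscaled 2× by Hires. fix. Treat this only as a plausibility check: the model weights and other intermediate tensors come on top of it.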

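When usage exceeds the memory available to the GPU, generation fails with the "Error completing request" error, so the fix is to lower the image size or the number of sampling steps. The message itself is only a wrapper; the actual cause is the exception on the last line of the log. In the example below it is "RuntimeError: MPS backend out of memory" (MPS is PyTorch's GPU backend on macOS), raised inside sample_hr_pass while the Hires. fix result was being decoded into an image, so the Hires. fix upscale factor also counts toward the image size.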
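As a starting point for how far to shrink, the hypothetical helper below scales width and height by the same factor until an estimated full-resolution tensor fits a budget you choose, then rounds each side down to a multiple of 8 so the latent (1/8 of the image resolution) stays a whole number of cells. Its memory model is an assumption, not a Web UI feature: one 256-channel fp32 feature map at full output resolution, as in the SD 1.x VAE decoder.

```python
import math


def fit_to_budget(width, height, budget_gib, channels=256, bytes_per_value=4, multiple=8):
    """Hypothetical helper: shrink (width, height), keeping the aspect ratio,
    until the estimated full-resolution feature map fits in budget_gib.

    Assumed model (not from the article): one channels x height x width tensor
    of bytes_per_value-byte floats dominates peak memory.
    """
    def estimate(w, h):
        return channels * w * h * bytes_per_value / 1024**3

    if estimate(width, height) <= budget_gib:
        return width, height  # already fits; leave the size unchanged

    # Memory scales with area, so each side shrinks by the square root of the ratio.
    scale = math.sqrt(budget_gib / estimate(width, height))
    # Round down to a multiple of 8; rounding down keeps the estimate within budget.
    new_w = max(multiple, int(width * scale) // multiple * multiple)
    new_h = max(multiple, int(height * scale) // multiple * multiple)
    return new_w, new_h


print(fit_to_budget(2048, 1024, budget_gib=1.0))
print(fit_to_budget(512, 512, budget_gib=1.0))
```

Because memory scales with area, halving it only requires shrinking each side to about 71% (the square root of 0.5): 2048×1024 with a 1 GiB budget becomes 1448×720. With Hires. fix enabled this is the final size, so divide by the upscale factor to get the base width and height.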

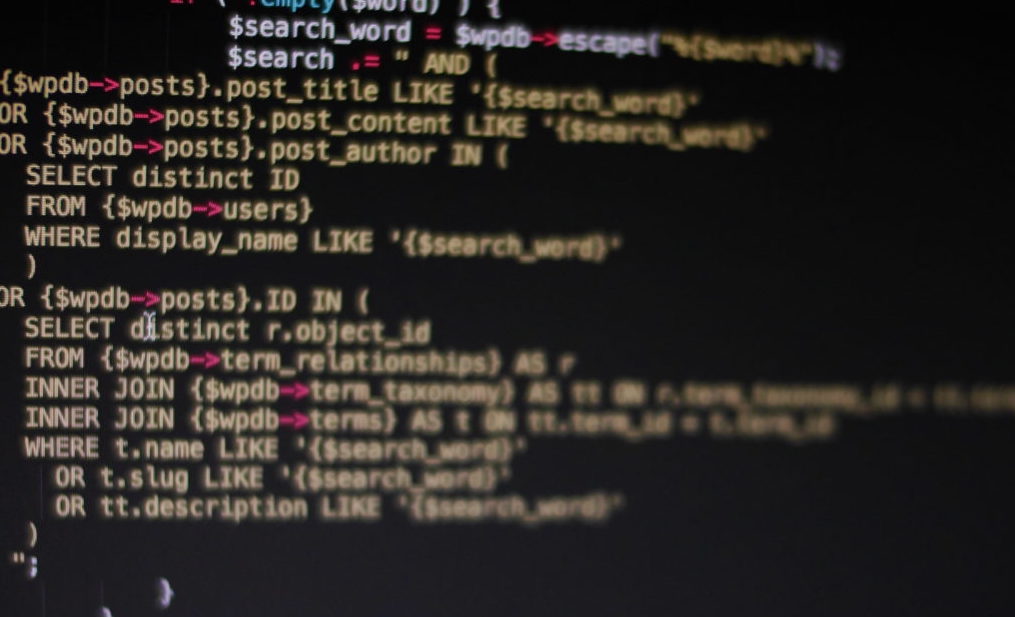

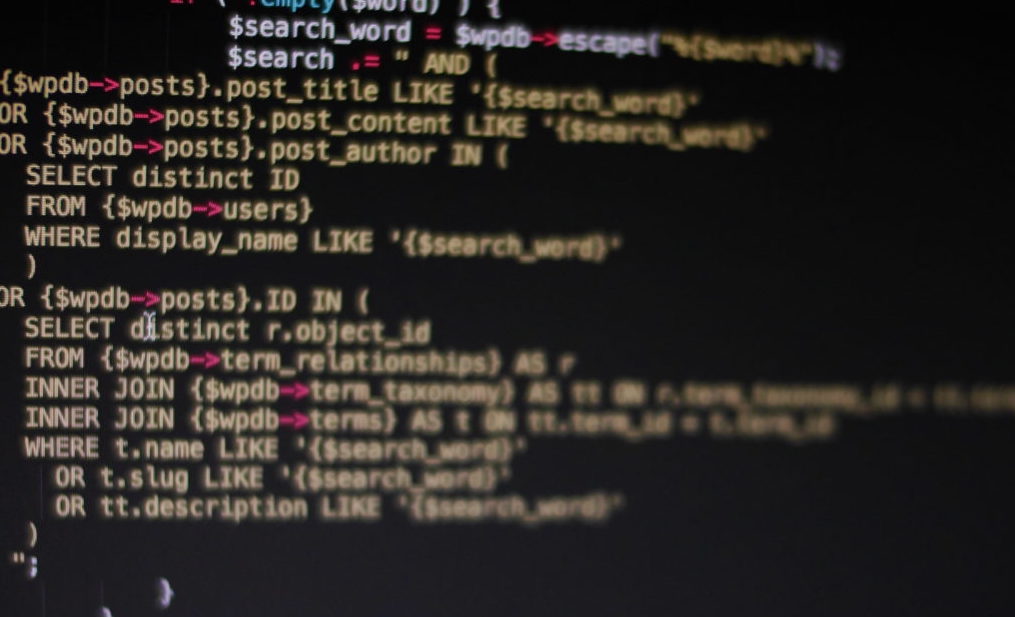

```
*** Error completing request, 35.20s/it]
*** Arguments: ('task(p70l6u99c08qnyf)', 'プロンプト', [], 20, 'DPM++ 2M Karras', 1, 1, 7, 1024, 512, True, 0.7, 2, 'Latent', 0, 0, 0, 'Use same checkpoint', 'Use same sampler', '', '', [], , 0, False, '', 0.8, -1, False, -1, 0, 0, 0, False, False, 'positive', 'comma', 0, False, False, '', 1, '', [], 0, '', [], 0, '', [], True, False, False, False, 0, False) {}
Traceback (most recent call last):
  File "/Users/apps/stable-diffusion-webui/modules/call_queue.py", line 57, in f
    res = list(func(*args, **kwargs))
  File "/Users/apps/stable-diffusion-webui/modules/call_queue.py", line 36, in f
    res = func(*args, **kwargs)
  File "/Users/apps/stable-diffusion-webui/modules/txt2img.py", line 55, in txt2img
    processed = processing.process_images(p)
  File "/Users/apps/stable-diffusion-webui/modules/processing.py", line 732, in process_images
    res = process_images_inner(p)
  File "/Users/apps/stable-diffusion-webui/modules/processing.py", line 867, in process_images_inner
    samples_ddim = p.sample(conditioning=p.c, unconditional_conditioning=p.uc, seeds=p.seeds, subseeds=p.subseeds, subseed_strength=p.subseed_strength, prompts=p.prompts)
  File "/Users/apps/stable-diffusion-webui/modules/processing.py", line 1156, in sample
    return self.sample_hr_pass(samples, decoded_samples, seeds, subseeds, subseed_strength, prompts)
  File "/Users/apps/stable-diffusion-webui/modules/processing.py", line 1249, in sample_hr_pass
    decoded_samples = decode_latent_batch(self.sd_model, samples, target_device=devices.cpu, check_for_nans=True)
  File "/Users/apps/stable-diffusion-webui/modules/processing.py", line 594, in decode_latent_batch
    sample = decode_first_stage(model, batch[i:i + 1])[0]
  File "/Users/apps/stable-diffusion-webui/modules/sd_samplers_common.py", line 76, in decode_first_stage
    return samples_to_images_tensor(x, approx_index, model)
  File "/Users/apps/stable-diffusion-webui/modules/sd_samplers_common.py", line 58, in samples_to_images_tensor
    x_sample = model.decode_first_stage(sample.to(model.first_stage_model.dtype))
  File "/Users/apps/stable-diffusion-webui/modules/sd_hijack_utils.py", line 17, in
    setattr(resolved_obj, func_path[-1], lambda *args, **kwargs: self(*args, **kwargs))
  File "/Users/apps/stable-diffusion-webui/modules/sd_hijack_utils.py", line 26, in __call__
    return self.__sub_func(self.__orig_func, *args, **kwargs)
  File "/Users/apps/stable-diffusion-webui/modules/sd_hijack_unet.py", line 79, in
    first_stage_sub = lambda orig_func, self, x, **kwargs: orig_func(self, x.to(devices.dtype_vae), **kwargs)
  File "/Users/apps/stable-diffusion-webui/venv/lib/python3.10/site-packages/torch/utils/_contextlib.py", line 115, in decorate_context
    return func(*args, **kwargs)
  File "/Users/apps/stable-diffusion-webui/repositories/stable-diffusion-stability-ai/ldm/models/diffusion/ddpm.py", line 826, in decode_first_stage
    return self.first_stage_model.decode(z)
  File "/Users/apps/stable-diffusion-webui/repositories/stable-diffusion-stability-ai/ldm/models/autoencoder.py", line 90, in decode
    dec = self.decoder(z)
  File "/Users/apps/stable-diffusion-webui/venv/lib/python3.10/site-packages/torch/nn/modules/module.py", line 1501, in _call_impl
    return forward_call(*args, **kwargs)
  File "/Users/apps/stable-diffusion-webui/repositories/stable-diffusion-stability-ai/ldm/modules/diffusionmodules/model.py", line 641, in forward
    h = self.up[i_level].upsample(h)
  File "/Users/apps/stable-diffusion-webui/venv/lib/python3.10/site-packages/torch/nn/modules/module.py", line 1501, in _call_impl
    return forward_call(*args, **kwargs)
  File "/Users/apps/stable-diffusion-webui/repositories/stable-diffusion-stability-ai/ldm/modules/diffusionmodules/model.py", line 64, in forward
    x = self.conv(x)
  File "/Users/apps/stable-diffusion-webui/venv/lib/python3.10/site-packages/torch/nn/modules/module.py", line 1501, in _call_impl
    return forward_call(*args, **kwargs)
  File "/Users/apps/stable-diffusion-webui/extensions-builtin/Lora/networks.py", line 444, in network_Conv2d_forward
    return originals.Conv2d_forward(self, input)
  File "/Users/apps/stable-diffusion-webui/venv/lib/python3.10/site-packages/torch/nn/modules/conv.py", line 463, in forward
    return self._conv_forward(input, self.weight, self.bias)
  File "/Users/apps/stable-diffusion-webui/venv/lib/python3.10/site-packages/torch/nn/modules/conv.py", line 459, in _conv_forward
    return F.conv2d(input, weight, bias, self.stride,
RuntimeError: MPS backend out of memory (MPS allocated: 4.67 GB, other allocations: 2.58 GB, max allowed: 9.07 GB). Tried to allocate 2.00 GB on private pool. Use PYTORCH_MPS_HIGH_WATERMARK_RATIO=0.0 to disable upper limit for memory allocations (may cause system failure).
```
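The final RuntimeError line is where the real cause is stated. As a small sketch (the function name is hypothetical, and the regular expression only matches the message wording shown in this log), you can pull out its figures and check the arithmetic: 4.67 GB already allocated plus 2.58 GB of other allocations plus the 2.00 GB requested comes to 9.25 GB, which exceeds the 9.07 GB limit, assuming the limit is compared against that sum. The message also offers PYTORCH_MPS_HIGH_WATERMARK_RATIO=0.0 to remove the limit, but warns that this may cause system failure, so lowering the image size is the safer fix.

```python
import re

MESSAGE = (
    "RuntimeError: MPS backend out of memory (MPS allocated: 4.67 GB, "
    "other allocations: 2.58 GB, max allowed: 9.07 GB). Tried to allocate "
    "2.00 GB on private pool."
)

# Matches the wording of the message above; other PyTorch versions may differ.
OOM_PATTERN = re.compile(
    r"MPS allocated: ([\d.]+) GB, other allocations: ([\d.]+) GB, "
    r"max allowed: ([\d.]+) GB\)\. Tried to allocate ([\d.]+) GB"
)


def check_mps_oom(message):
    """Hypothetical helper: return (needed_gb, max_allowed_gb), or None if no match."""
    match = OOM_PATTERN.search(message)
    if match is None:
        return None
    allocated, other, max_allowed, requested = map(float, match.groups())
    needed = round(allocated + other + requested, 2)  # round away float noise
    return needed, max_allowed


needed, limit = check_mps_oom(MESSAGE)
print(f"needed {needed} GB, limit {limit} GB, short by {needed - limit:.2f} GB")
```

For this log it reports a shortfall of about 0.18 GB, which is why a modest reduction in image size can be enough.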

関連ページ

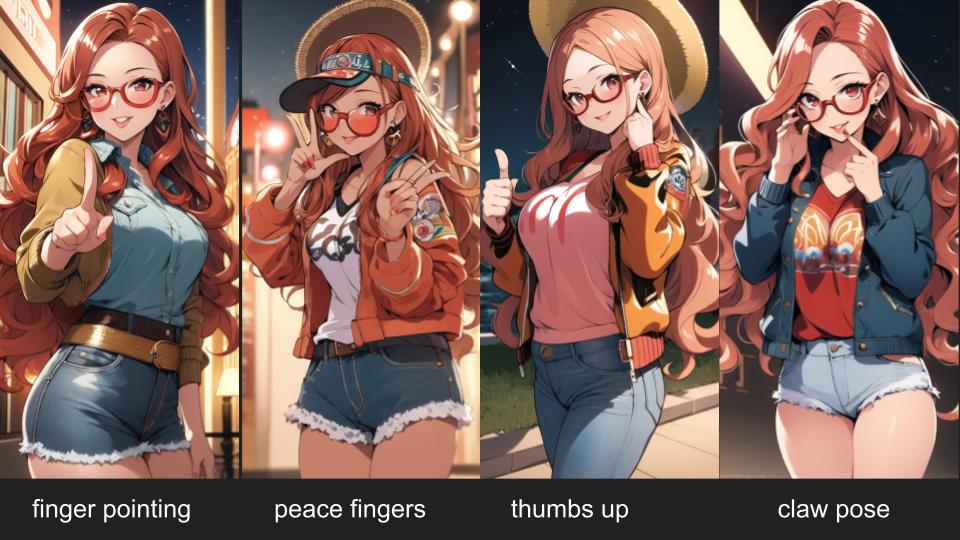

構図や目の色、表情など、より細かく自分好みにするためのプロンプト(呪文)で解説しています。

画像生成AIでよく使うプロンプト・呪文集

Stable Diffusion Web UI、にじジャーニーなどの画像生成AIでよく使うプロンプト(呪文)をまとめました。

画像生成AIとは?初心者向け解説

画像生成AIとは?イラストや実写真の作り方など、基本的な使い方から応用例についてに初心者向けにまとめました。

Comments

The source code is posted, but where is the description of the fix?

Sorry, the text describing the fix had gone missing; I have restored it.